Procedural dungeon stress test, part 2

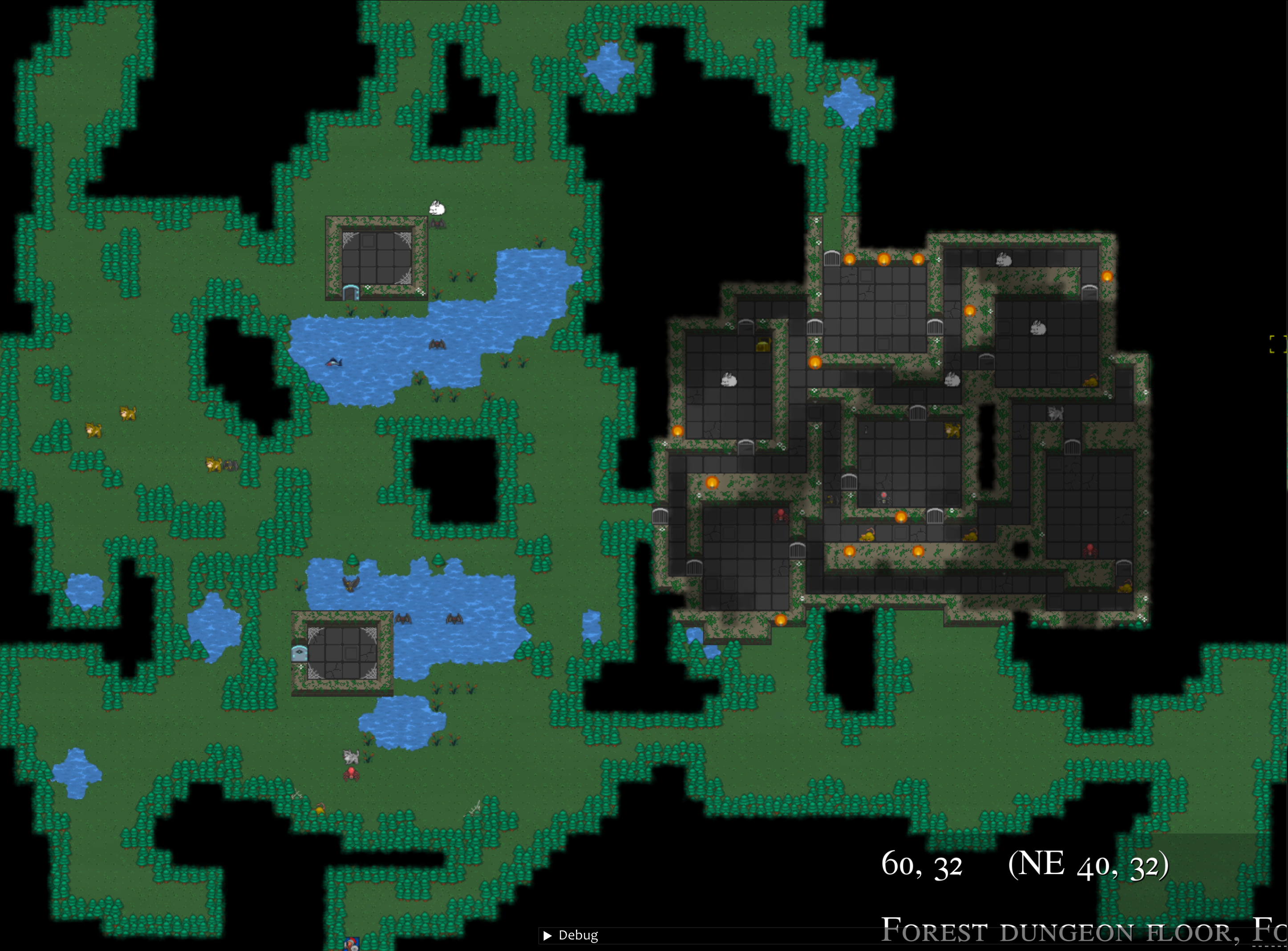

More stress testing this week! First of all, I created a special FoV mode where all potentially visible tiles are uncovered, including any visibility-blocking neighbours. This results in better-looking revealed maps (imo). Picture above! Everything is still destructible, but inner blocked areas (in black) stay hidden.
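A minimal sketch of the reveal rule, assuming that "potentially visible" means every see-through tile, that neighbours are the 8 surrounding tiles, and a hypothetical text encoding where `#` blocks visibility. A blocking tile is uncovered only if it touches at least one see-through tile, so walls surrounded entirely by other walls stay hidden.

```python
# Hypothetical tile encoding: '#' blocks visibility, anything else is see-through.
BLOCKING = "#"


def _has_open_neighbour(grid, x, y):
    """True if any of the 8 neighbours of (x, y) is see-through."""
    height, width = len(grid), len(grid[0])
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            if dx == 0 and dy == 0:
                continue
            nx, ny = x + dx, y + dy
            if 0 <= nx < width and 0 <= ny < height and grid[ny][nx] != BLOCKING:
                return True
    return False


def reveal_mask(grid):
    """Return the set of (x, y) tiles to uncover.

    See-through tiles are always uncovered; blocking tiles are uncovered
    only when they border a see-through tile. Inner blocked areas stay hidden.
    """
    revealed = set()
    for y, row in enumerate(grid):
        for x, tile in enumerate(row):
            if tile != BLOCKING or _has_open_neighbour(grid, x, y):
                revealed.add((x, y))
    return revealed
```

For a 5x5 block of walls with a single open tile in the middle, this uncovers the open tile plus its 8 surrounding walls, while the outer ring stays hidden.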

Next up was tackling the buggy map with no exits. I dusted off some old code that ran validation checks (connectivity, map borders, etc.), integrated it at the end of the dungeon generation process, and got useful validation again. That was followed by a couple of (part-time) days of debugging the failing validations and fixing the code that caused them. In the end, map generation became super stable (at least for the single preset I'm using).
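For illustration, here is a small sketch of the kind of post-generation checks mentioned above. It is not the actual validation code: the tile characters (`#` wall, `>` exit), the 4-neighbour connectivity and the error messages are all assumptions. It checks that the border is closed, that every walkable tile is reachable via a breadth-first flood fill, and that at least one exit exists.

```python
from collections import deque

# Hypothetical tile encoding: '#' is a wall, '>' is an exit, anything else is walkable.
WALL, EXIT = "#", ">"


def validate_map(grid):
    """Return a list of validation failures; an empty list means the map passes."""
    errors = []
    height, width = len(grid), len(grid[0])

    # 1. Map borders: every edge tile must be a wall.
    for y in range(height):
        for x in range(width):
            on_border = x in (0, width - 1) or y in (0, height - 1)
            if on_border and grid[y][x] != WALL:
                errors.append(f"open border tile at {(x, y)}")

    # 2. Connectivity: flood-fill from one walkable tile (4-neighbourhood).
    walkable = {(x, y) for y in range(height) for x in range(width) if grid[y][x] != WALL}
    if not walkable:
        return errors + ["map has no walkable tiles"]
    start = min(walkable)
    seen = {start}
    queue = deque([start])
    while queue:
        x, y = queue.popleft()
        for nxt in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            # Membership in `walkable` also keeps us inside the map bounds.
            if nxt in walkable and nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    if seen != walkable:
        errors.append(f"unreachable walkable tiles: {len(walkable - seen)}")

    # 3. Exits: at least one must exist (reachability is covered by check 2).
    if not any(EXIT in row for row in grid):
        errors.append("map has no exits")
    return errors
```

Returning a list of failures rather than a single boolean makes it easier to see which check broke when a generated map fails.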

Next was rerunning the test. I realized that the editor executable's memory usage grows huge very quickly, mostly because of all the debugger output, which includes meaty stack traces. So I set the log level to exception and tried again.

I finally got the test to complete successfully: about 4000 dungeons! The executable was at ~6GB. I immediately proceeded to save the state, because the data would be super valuable for performance measurements, memory usage, etc. A goldmine! Well, that didn't work out. I got a crash in the serialization process (saving the game to the file). But why? My projections had said that the savefile would be about 170MB max. Well, I forgot that this value is the COMPRESSED (LZ4) size, not the uncompressed one.

So how big is the uncompressed data after 4000 levels? I didn't want to run the bot again, as it takes 30 minutes, so I did a bit of math instead. I put some breakpoints here and there and adjusted the bot to save to file every 20 levels. After about 350 levels, I had some (compressed, uncompressed) data points. For example, at 340 levels the uncompressed save file is 233MB and the compressed one is 23MB. Besides being happy about the compression, I realized that after 4000 levels the uncompressed file would be over 2.1GB, supposedly compressing to something like 140MB.

But that exceeds the maximum value of a signed 32-bit int (2^31 - 1 = 2,147,483,647 bytes), and the error I got was `System.GC.AllocateUninitializedArray(int, bool): System.OverflowException: Arithmetic operation resulted in an overflow.` That `int` there was the proof I needed. It's a memory allocation, use some bloody unsigned numbers, silly C#! It's like we're not supposed to do anything intensive with it. Apparently limiting objects to 2GB was a conscious design decision, and apparently you can override that behaviour by setting some .NET configuration option. To make matters more complex, that option is set in a file that Godot auto-generates and that I have no control over! Oh, the joy. So, anyway, that's the state on that front.
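The back-of-the-envelope check can be sketched as follows, assuming the save size grows linearly with the number of levels visited and taking MB as 10^6 bytes. This is a cruder single-point fit than using all the collected data points, so treat its exact number loosely. What matters is the comparison against the largest length a signed 32-bit `int` can describe. The function names are hypothetical.

```python
INT32_MAX = 2**31 - 1  # largest value a signed 32-bit int can hold
MB = 1_000_000         # assumption: MB as 10^6 bytes (the conclusion holds for MiB too)


def project_size(sample_levels, sample_bytes, target_levels):
    """Linearly extrapolate the save size, assuming a constant per-level cost."""
    return sample_bytes * target_levels // sample_levels


def fits_in_int32(size_bytes):
    """True if a buffer of this size can be described by a signed 32-bit length."""
    return size_bytes <= INT32_MAX


# Data point from the text: 340 levels -> 233MB uncompressed.
projected = project_size(340, 233 * MB, 4000)
if not fits_in_int32(projected):
    print(f"projected {projected} bytes exceeds the int32 limit of {INT32_MAX}")
```

A guard like `fits_in_int32` run before serializing would turn the crash into a clear, early error.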

I then edited the bot to save every 500 dungeons visited. The last useful save I got was at 3500 dungeons visited: the savefile is 142MB and takes 19 seconds to load in the editor (a problem for another time!). But after loading that savefile, performance is much better than after going through all 3500 dungeons in one go, which is quite nice.

Another thing that needed a bit of time, but was totally worth it, was adding support for creating a dungeon in-game from a JSON file. To clarify, this is how things worked before: when we create a "dungeon" in the overworld, it's just the specification. When we enter the dungeon, a more complex level specification is built on the fly and converted to JSON. This JSON is sent to the C++ plugin, where it's read and used to instantiate the dungeon. The dungeon comes back as a lightweight bytestream, which is received in C# and gets instantiated. What I did now was to support creating a new dungeon in the overworld that instantiates a single level from a given JSON. The reason: when I identify a JSON that leads to a validation failure in the native plugin, I fix the issues so that it passes, and then reload that JSON in the game to make sure everything's alright there too.
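Conceptually, the new path is a branch in front of the on-the-fly spec builder. Here is a minimal sketch, where every name (`build_level_spec`, `level_json_for`, the `fixed_level_json`, `preset` and `seed` keys) is hypothetical and not the game's actual code. If an overworld dungeon carries a fixed level JSON, it is passed through unchanged. Otherwise the level spec is built as before.

```python
import json


def build_level_spec(dungeon_spec, level_index):
    """Hypothetical stand-in for the on-the-fly level-spec builder."""
    return {"preset": dungeon_spec["preset"], "seed": dungeon_spec["seed"] + level_index}


def level_json_for(dungeon_spec, level_index):
    """Return the JSON string that would be sent to the native generator.

    Dungeons created from a fixed JSON (e.g. a hand-fixed spec that previously
    failed validation) contain exactly one level, and the JSON is used verbatim.
    """
    fixed = dungeon_spec.get("fixed_level_json")
    if fixed is not None:
        if level_index != 0:
            raise ValueError("fixed-JSON dungeons have a single level")
        json.loads(fixed)  # fail early on malformed JSON before it reaches the plugin
        return fixed
    return json.dumps(build_level_spec(dungeon_spec, level_index))
```

Passing the fixed JSON through verbatim means the exact file that was debugged against the native plugin is also the one the game loads.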

And that's all for this week, have a nice weekend!